The Linux boot process describes how a Linux machine starts up, from the moment you turn it on until you reach the login screen. All we are trying to do is hand control of the hardware over to the operating system. The main reason to learn about the boot process is so that we can troubleshoot boot issues effectively.

1. Basic Input Output System (BIOS)

Here’s what happens when you switch on a PC. The CPU always begins at a fixed location called the reset vector: a memory address where the CPU starts fetching instructions as soon as it comes out of reset. On x86 processors this address is 0xFFFFFFF0, and the instruction stored there is a jump to the start of the BIOS code in the firmware ROM. The CPU follows that jump and starts executing the BIOS, which is the first software that runs when a computer is turned on. The BIOS has two responsibilities:

- POST (Power-On Self-Test): checks the integrity of hardware such as the motherboard, CPU, RAM, timer ICs, keyboard and hard drives.

- Loads and executes the bootloader.

When the PC starts up, the BIOS program does a POST check and then goes through each device listed in the boot order until it finds a bootable device. It will then load the bootloader from that bootable device and its job is done. The BIOS is “hardcoded” to look at the first sector of the bootable device and load it into memory. This first sector is called the Master Boot Record (MBR).
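The boot-order scan above can be modelled in a few lines. This is a simplified sketch, not real firmware: it assumes the first sector of each device has already been read into memory, and it treats a sector as bootable when it ends with the 0x55AA signature described in the next section. The names `is_bootable` and `pick_boot_device` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

SECTOR_SIZE = 512
BOOT_SIGNATURE = b"\x55\xaa"  # the last two bytes (offsets 510 and 511) of a bootable sector


def is_bootable(first_sector: bytes) -> bool:
    """Return True if a 512-byte sector ends with the 0x55 0xAA boot signature."""
    return len(first_sector) == SECTOR_SIZE and first_sector[510:512] == BOOT_SIGNATURE


def pick_boot_device(boot_order: Sequence[Tuple[str, bytes]]) -> Optional[str]:
    """Walk the boot order and return the first device whose sector 0 is bootable.

    Each entry is (device_name, contents_of_sector_0); actually reading sector 0
    from hardware is out of scope for this sketch.
    """
    for name, sector0 in boot_order:
        if is_bootable(sector0):
            return name
    return None  # no bootable device found


if __name__ == "__main__":
    empty_usb = bytes(SECTOR_SIZE)                        # all zeros: no signature
    hard_disk = bytes(SECTOR_SIZE - 2) + BOOT_SIGNATURE   # valid signature
    print(pick_boot_device([("usb0", empty_usb), ("sda", hard_disk)]))  # sda
```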

2. The Master Boot Record

NB: The term MBR can refer either to the first sector (sector 0) of a bootable device or to a partitioning scheme.

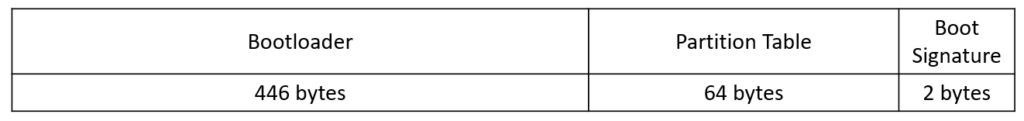

The MBR in this context refers to the first sector of a bootable device. It is a total of 512 bytes (the size of a sector) and is divided into three sections:

- The bootloader section (446 bytes), where the code for the bootloader is stored.

- The partition table section (64 bytes, made up of four 16-byte entries), where the MBR partition table for that disk is stored.

- The boot signature (2 bytes), which marks the sector as bootable. Its value is 0x55AA (the byte 0x55 followed by the byte 0xAA).
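To make the layout concrete, here is a minimal Python sketch that splits a 512-byte MBR into its three sections and decodes the four 16-byte partition-table entries. On a real system you could feed it a copy of sector 0 taken with `dd if=/dev/sda of=mbr.bin bs=512 count=1` (the device name is an assumption; yours may differ). `parse_mbr` and the dataclasses are hypothetical names for illustration; the demo builds a fake MBR instead of reading a disk.

```python
import struct
from dataclasses import dataclass
from typing import List

SECTOR_SIZE = 512
BOOTSTRAP_SIZE = 446          # bootloader code area: bytes 0-445
PARTITION_TABLE_OFFSET = 446  # four 16-byte entries: bytes 446-509
PARTITION_ENTRY_SIZE = 16
SIGNATURE_OFFSET = 510        # boot signature: bytes 510-511
# One entry: status, CHS start (skipped), type, CHS end (skipped), LBA start, sector count
ENTRY_FORMAT = "<B3xB3xII"


@dataclass
class PartitionEntry:
    bootable: bool    # status byte 0x80 marks the active partition
    type_id: int      # e.g. 0x83 for a Linux partition
    start_lba: int
    num_sectors: int


@dataclass
class MBR:
    bootstrap: bytes
    partitions: List[PartitionEntry]
    signature_ok: bool


def parse_mbr(sector: bytes) -> MBR:
    """Split a 512-byte MBR into bootloader code, partition entries and signature."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly 512 bytes")
    partitions = []
    for i in range(4):
        offset = PARTITION_TABLE_OFFSET + i * PARTITION_ENTRY_SIZE
        status, type_id, start_lba, num_sectors = struct.unpack_from(ENTRY_FORMAT, sector, offset)
        if type_id != 0:  # type 0x00 means the slot is unused
            partitions.append(PartitionEntry(status == 0x80, type_id, start_lba, num_sectors))
    return MBR(
        bootstrap=sector[:BOOTSTRAP_SIZE],
        partitions=partitions,
        # On disk the signature is the byte 0x55 followed by 0xAA
        signature_ok=sector[SIGNATURE_OFFSET:SECTOR_SIZE] == b"\x55\xaa",
    )


if __name__ == "__main__":
    # Build a fake MBR with one bootable Linux (0x83) partition starting at LBA 2048.
    demo = bytearray(SECTOR_SIZE)
    struct.pack_into(ENTRY_FORMAT, demo, PARTITION_TABLE_OFFSET, 0x80, 0x83, 2048, 204800)
    demo[SIGNATURE_OFFSET:] = b"\x55\xaa"
    mbr = parse_mbr(bytes(demo))
    print(mbr.signature_ok, mbr.partitions[0].start_lba)  # True 2048
```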

Because of this layout, your bootloader has to fit in the 446-byte section of the MBR. On modern systems this is not enough, so the bootloader is split into two or more parts, or stages, with the first stage located in the MBR.

Stage 1 of the bootloader will be responsible for loading the next stage of the bootloader which can be located somewhere else on the disk where it can occupy more space than the 446 bytes in the MBR.

3. The Bootloader

This is a program that is responsible for loading the operating system into memory as part of the boot process. LILO (Linux Loader) was the default for a long time. The LILO bootloader essentially points to the location on disk where the kernel is stored and then loads the kernel into memory. It had many limitations, so it was replaced by the GRand Unified Bootloader, GRUB (and now GRUB2).

We will stick to GRUB2 for this guide, but GRUB v1 follows a similar boot process.

Grub2

Stage 1

Also known as the primary bootloader, this is a 512-byte image (named “boot.img”) that is located in the MBR; when it is installed, the partition table already in the MBR is preserved. Its only task is to load stage 1.5. The Logical Block Address (LBA) of the first sector of stage 1.5 (core.img) is hardcoded into boot.img. When boot.img executes, it loads this first sector of core.img into memory and transfers control to it. On a typical RHEL-based system, you can find boot.img at /boot/grub2/i386-pc/boot.img.

Stage 1.5

Stage 1.5 is an image (called “core.img”) that is located between sector 0 (the MBR) and sector 63, where the first partition traditionally starts. The first partition started at sector 63 because older partitioning tools aligned partitions to track boundaries, and disks reported 63 sectors per track. This gives core.img about 31 KiB of space (sectors 1 to 62, or 62 × 512 = 31,744 bytes), and you can confirm its size by listing it as shown below:

```
$ ls -lh /boot/grub2/i386-pc/*.img
-rw-r--r-- 1 root root 512 Jun 11 08:42 /boot/grub2/i386-pc/boot.img
-rw-r--r-- 1 root root 28K Jun 11 08:42 /boot/grub2/i386-pc/core.img
```

The first sector of core.img (which is loaded into memory by boot.img) contains the LBAs of the rest of the blocks that make up core.img, plus a loader that loads the rest of core.img into memory. During stage 1.5, the GRUB kernel and the filesystem modules needed to locate stage 2 in /boot/grub2 (which can be located anywhere) are loaded into RAM. At this point GRUB is already running, and if anything goes wrong between now and the handover to the kernel, GRUB drops to the GRUB rescue shell.
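The space available to core.img follows directly from where the first partition starts. The sketch below does that arithmetic (the helper names are hypothetical): with the legacy start at sector 63 there are 62 free sectors, which is enough for the 28K core.img listed above, while partitioning tools that align the first partition to 1 MiB (sector 2048) leave almost 1 MiB.

```python
SECTOR_SIZE = 512


def post_mbr_gap_bytes(first_partition_lba: int, sector_size: int = SECTOR_SIZE) -> int:
    """Bytes between the MBR (sector 0) and the first partition.

    Sectors 1 .. first_partition_lba - 1 are not used by any partition, which is
    where core.img is embedded on BIOS/MBR systems.
    """
    if first_partition_lba < 1:
        raise ValueError("the first partition cannot start at sector 0")
    return (first_partition_lba - 1) * sector_size


def fits(core_img_size: int, first_partition_lba: int) -> bool:
    """Check whether a core.img of the given size fits in the post-MBR gap."""
    return core_img_size <= post_mbr_gap_bytes(first_partition_lba)


if __name__ == "__main__":
    print(post_mbr_gap_bytes(63))    # 31744 (31 KiB)
    print(post_mbr_gap_bytes(2048))  # 1048064 (just under 1 MiB)
    print(fits(28 * 1024, 63))       # True
```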

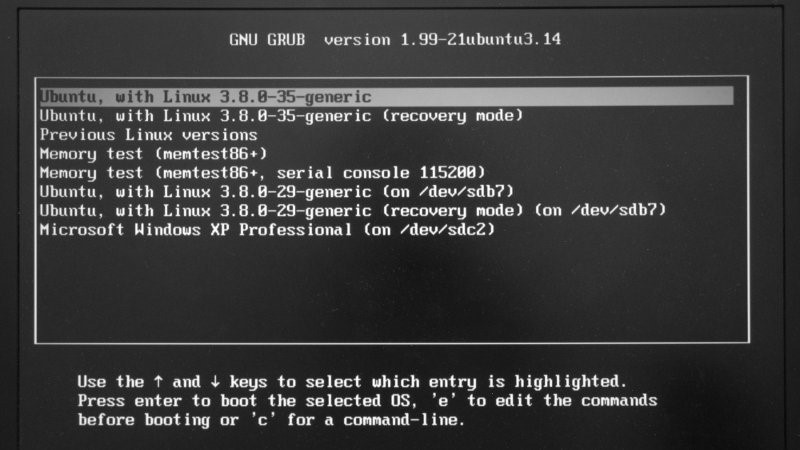
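The “load the rest of yourself” step of stage 1.5 can be sketched as follows. This is a simplified model, not GRUB’s actual code: the disk is a Python bytes object, and the block-list format (a little-endian 32-bit count followed by that many 32-bit LBAs in the first sector) is hypothetical, chosen only to show how one hardcoded LBA is enough to find every other block of core.img.

```python
import struct
from typing import List

SECTOR_SIZE = 512


def read_sector(disk: bytes, lba: int) -> bytes:
    """Return the sector at logical block address `lba` (byte offset lba * 512)."""
    start = lba * SECTOR_SIZE
    sector = disk[start:start + SECTOR_SIZE]
    if len(sector) != SECTOR_SIZE:
        raise ValueError(f"LBA {lba} is beyond the end of the disk")
    return sector


def parse_block_list(first_sector: bytes) -> List[int]:
    """Decode the HYPOTHETICAL block list: a uint32 count, then that many uint32 LBAs.

    GRUB's real on-disk format is different; this layout is for illustration only.
    """
    (count,) = struct.unpack_from("<I", first_sector, 0)
    return list(struct.unpack_from(f"<{count}I", first_sector, 4))


def load_core_image(disk: bytes, first_lba: int) -> bytes:
    """Load core.img given only the LBA of its first sector (what boot.img knows)."""
    first_sector = read_sector(disk, first_lba)
    rest = b"".join(read_sector(disk, lba) for lba in parse_block_list(first_sector))
    return first_sector + rest
```

The blocks do not have to be contiguous: the block list can point anywhere on the disk, which is what frees the later stages from the 446-byte limit.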

Stage 2

Stage 2 starts when /boot/grub2/grub.cfg is parsed, and this is when the GRUB menu is displayed. Stage 2 loads the selected kernel image (named “/boot/vmlinuz-<kernel-version>”) along with the matching initramfs image (named “/boot/initramfs-<kernel-version>”).

NB: GRUB supports chainloading, which allows it to boot operating systems that GRUB does not support directly, such as Windows. When you select a Windows entry, chainloading lets GRUB load the Windows bootloader, which then continues the boot process and loads Windows.
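As a rough illustration of what stage 2 extracts from grub.cfg, the sketch below pulls each menu entry’s title, kernel path and initramfs path out of the file’s text. It is a simplified parser, not GRUB’s: it assumes one `menuentry` block per entry, recognises only the `linux`/`linux16` and `initrd`/`initrd16` commands, and ignores submenus and variables. The sample entry in the test is made up. When /boot is a separate partition, the paths in grub.cfg are relative to that partition (for example `/vmlinuz-<kernel-version>` rather than `/boot/vmlinuz-<kernel-version>`).

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# menuentry 'Title' ... {   (single or double quotes)
TITLE_RE = re.compile(r"""^\s*menuentry\s+(['"])(.*?)\1""")
# "linux" or "linux16" followed by the kernel path (kernel arguments are ignored)
KERNEL_RE = re.compile(r"^\s*linux(?:16)?\s+(\S+)")
# "initrd" or "initrd16" followed by the first initramfs path
INITRD_RE = re.compile(r"^\s*initrd(?:16)?\s+(\S+)")


@dataclass
class MenuEntry:
    title: str
    kernel: Optional[str] = None
    initrd: Optional[str] = None


def parse_menu_entries(cfg_text: str) -> List[MenuEntry]:
    """Collect title, kernel and initramfs paths from the menuentry blocks of grub.cfg."""
    entries: List[MenuEntry] = []
    current: Optional[MenuEntry] = None
    for line in cfg_text.splitlines():
        if m := TITLE_RE.match(line):
            current = MenuEntry(title=m.group(2))
            entries.append(current)
        elif current is not None and (m := KERNEL_RE.match(line)):
            current.kernel = m.group(1)
        elif current is not None and (m := INITRD_RE.match(line)):
            current.initrd = m.group(1)
        elif line.strip() == "}":
            current = None  # end of the menuentry block
    return entries
```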

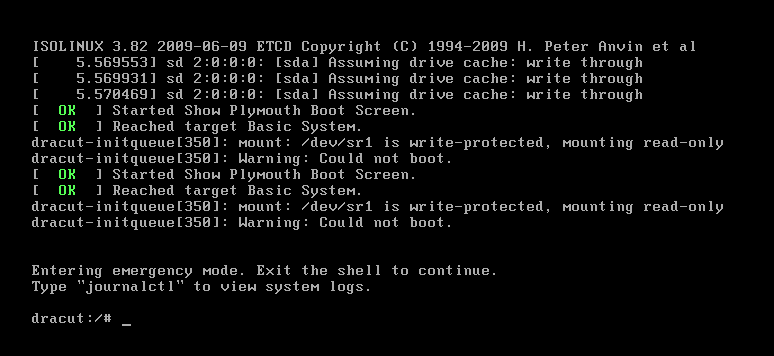

Initramfs and the Chicken-and-Egg problem

The kernel needs to mount the root filesystem which can have one or more of the following properties:

- May be on an NFS filesystem.

- May be on a RAID array.

- May be using LVM.

- May be encrypted.

Distribution kernels usually do not have the drivers needed to mount a filesystem with any of the properties above built in. Instead, these drivers are built as loadable modules and stored, along with the rest of the kernel’s modules, under the “/lib/modules” directory. However, for the kernel to access these modules, it needs to mount the root filesystem… this is the chicken-and-egg problem.

The solution to this is to create a very small filesystem called initramfs. Initramfs has all the necessary drivers and modules that the kernel needs to mount the root filesystem. This filesystem is compressed into an archive which is extracted by the kernel into a temporary filesystem (tmpfs) that’s mounted in memory.

On RHEL-based and Fedora systems, the initramfs image is generated by a program called dracut (Debian and Ubuntu use update-initramfs instead).

Initrd vs initramfs

Both do the same thing but in slightly different ways.

Initrd is a disk image which is made available as a special block device “/dev/ram”. This block device contains a filesystem that is mounted in memory. The drivers needed to mount this filesystem, which for example could be ext3, must also be compiled into the kernel.

Initramfs is an archive (which may or may not be compressed) that contains the files and directories you find in a typical Linux filesystem. The kernel extracts this archive into a tmpfs that is mounted in memory, so there is no need to compile any filesystem drivers into the kernel as you would for initrd.
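Because initramfs is just an archive, you can look inside it. The kernel’s initramfs format is a cpio archive in the “newc” format, and the sketch below lists the paths it contains. It assumes a single gzip-compressed archive; real images may use other compressors (such as xz or zstd) or, with dracut, start with an uncompressed early-microcode archive, so on a real system a tool such as dracut’s `lsinitrd` is the practical choice. The function names are hypothetical.

```python
import gzip
from typing import List

NEWC_MAGIC = b"070701"
HEADER_LEN = 110  # 6-byte magic + 13 fields of 8 hex digits each


def _align4(offset: int) -> int:
    """Round up to the next multiple of 4 (newc pads names and data this way)."""
    return (offset + 3) & ~3


def list_newc_entries(archive: bytes) -> List[str]:
    """Return the path names stored in an uncompressed newc-format cpio archive."""
    names: List[str] = []
    pos = 0
    while True:
        header = archive[pos:pos + HEADER_LEN]
        if header[:6] != NEWC_MAGIC:
            raise ValueError(f"no newc cpio header at offset {pos}")
        # Fields: ino, mode, uid, gid, nlink, mtime, filesize,
        #         devmajor, devminor, rdevmajor, rdevminor, namesize, check
        fields = [int(header[6 + 8 * i:14 + 8 * i], 16) for i in range(13)]
        filesize, namesize = fields[6], fields[11]
        name_start = pos + HEADER_LEN
        name = archive[name_start:name_start + namesize - 1].decode()  # drop the NUL
        if name == "TRAILER!!!":  # marks the end of the archive
            return names
        names.append(name)
        data_start = _align4(name_start + namesize)
        pos = _align4(data_start + filesize)


def list_initramfs(path: str) -> List[str]:
    """List a gzip-compressed initramfs image (the only compression handled here)."""
    with open(path, "rb") as f:
        return list_newc_entries(gzip.decompress(f.read()))
```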

Initrd was deprecated in favour of initramfs which was introduced in Linux kernel version 2.6.13.

4. The Kernel

When the kernel is loaded into memory by GRUB2, it first initializes and configures the computer’s memory and configures the various hardware attached to the system, including all processors, I/O subsystems, and storage devices.

The kernel then extracts initramfs into a tmpfs that’s mounted in memory and then uses this to mount the root filesystem as read-only (to protect it in case things don’t go well during the rest of the boot process).

After the root filesystem is mounted, the system manager is the first program (daemon) to be executed, so it has a PID of 1. On SysV systems this daemon is called “init”, and on SystemD systems it is systemd.
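To check which system manager a machine booted with, the approach documented for `sd_booted(3)` is to test whether the directory /run/systemd/system exists, since systemd creates it early during boot. The sketch below does that and also reads /proc/1/comm, the short command name the kernel reports for PID 1. The helper names, and the directory parameters added so the functions can be tested against a fake directory tree, are hypothetical.

```python
import os


def booted_with_systemd(run_dir: str = "/run") -> bool:
    """Same check as sd_booted(3): does <run_dir>/systemd/system exist?"""
    return os.path.isdir(os.path.join(run_dir, "systemd", "system"))


def pid1_comm(proc_dir: str = "/proc") -> str:
    """Return the command name of PID 1 from <proc_dir>/1/comm (Linux only)."""
    with open(os.path.join(proc_dir, "1", "comm")) as f:
        return f.read().strip()


if __name__ == "__main__":
    if os.path.isdir("/proc/1"):
        print("PID 1 is:", pid1_comm())
        print("booted with systemd:", booted_with_systemd())
```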

5.1. SysV-Init Boot Process

The last stage of the Linux boot process is handled by the system manager. On systems that use SysV-Init, the kernel starts the first program, called “init”, which has a PID of 1. Init is a daemon that runs throughout the lifetime of the system until it shuts down. The first thing the init daemon does is execute the /etc/rc.d/rc.sysinit script. This script performs tasks such as the following (to mention just a few):

- Set the system’s hostname

- Unmount initramfs

- Set kernel parameters as defined in /etc/sysctl.conf

- Start devfs

- Mount procfs and sysfs

- Dump the current contents of the kernel ring buffer into /var/log/dmesg

- Process /etc/fstab (mounting filesystems and running fsck)

- Enable RAID and LVM

After the rc.sysinit script is executed, the runlevel scripts are executed and these scripts will start the necessary services according to the specified runlevel.

Runlevels

A runlevel describes the state of a system with regard to the services and functionality that are available. There are seven runlevels in total, defined as follows:

- 0: Halt or shutdown the system

- 1: Single user mode

- 2: Multi-user mode, without networking

- 3: Full multi-user mode, with networking

- 4: Officially not defined; unused

- 5: Full multi-user mode with NFS and graphics (typical for desktops)

- 6: Reboot

In most cases, Linux servers running in the cloud use runlevel 3. If your Linux machine has a graphical desktop installed, it uses runlevel 5. Rebooting a system changes the runlevel to 6, and shutting it down changes it to 0. Runlevel 1 (single-user mode) is a very limited state with only the most essential services available, and it is used for maintenance. Runlevel 4 is left undefined, so it is not used unless an administrator configures it.

The default runlevel of a system is defined in the config file /etc/inittab. If you open this file you will see a line similar to the following:

id:3:initdefault:

In this example, the default runlevel is 3. If you want to troll someone, you can change that number to 0 or 6.
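A SysV init reads this entry at boot to pick the runlevel it enters. The sketch below shows how the entry can be found: each non-comment line of /etc/inittab has the form `id:runlevels:action:process`, and the default is the entry whose action is `initdefault`. `default_runlevel` is a hypothetical helper, not part of any init implementation.

```python
from typing import Optional


def default_runlevel(inittab_text: str) -> Optional[int]:
    """Return the runlevel of the 'initdefault' entry in /etc/inittab text, if any."""
    for raw in inittab_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # id:runlevels:action:process (the process field may itself contain ':')
        parts = line.split(":", 3)
        if len(parts) == 4 and parts[2] == "initdefault":
            return int(parts[1])
    return None


if __name__ == "__main__":
    with open("/etc/inittab") as f:  # present only on SysV-Init systems
        print(default_runlevel(f.read()))
```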

Runlevel Scripts

As mentioned earlier, a runlevel describes the state of the system with respect to the services and functionality available. These services are started by runlevel scripts and each runlevel has a set of scripts which are located under “/etc/rc.d” as shown below:

```
$ ls -l /etc/rc.d
total 60
drwxr-xr-x 2 root root 4096 Aug 26 09:19 init.d
-rwxr-xr-x 1 root root 2617 Aug 17 2017 rc
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc0.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc1.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc2.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc3.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc4.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc5.d
drwxr-xr-x 2 root root 4096 Aug 26 09:19 rc6.d
-rwxr-xr-x 1 root root 220 Jul 2 06:56 rc.local
-rwxr-xr-x 1 root root 20108 Aug 17 2017 rc.sysinit
```

Each of the rcN.d directories contains scripts whose names start with “K” or “S” (on Red Hat-style systems these are symbolic links to the scripts in /etc/rc.d/init.d). Scripts that start with “S” are run to start services, and scripts that start with “K” are run to stop (kill) them. The “K” or “S” is followed by a number that specifies the order in which the scripts run, and this is how you define dependencies between services. For example, you will notice that the “network” start script is set to run before the “ssh” start script, since a system needs network access before the SSH service can start. Consequently, the network kill script is set to run AFTER the ssh kill script.
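The ordering rule can be sketched in Python. This models what a Red Hat-style rc script does when it enters a runlevel: it runs the K scripts with `stop` first, then the S scripts with `start`, each group in the order of the number after the letter. Because the numbers are two digits, sorting the names as strings gives the same order as sorting them numerically. `rc_plan` is a hypothetical helper, and the script names and numbers in the test (`S10network`, `S55sshd`, and so on) are made up.

```python
from typing import List, Tuple


def rc_plan(script_names: List[str]) -> List[Tuple[str, str]]:
    """Return (action, service) pairs in the order an rc script runs them.

    Names look like S10network or K25sshd: a letter, a two-digit order
    number, then the service name. Other files are ignored.
    """
    kill_scripts = sorted(n for n in script_names if n.startswith("K"))
    start_scripts = sorted(n for n in script_names if n.startswith("S"))
    plan = [("stop", name[3:]) for name in kill_scripts]     # K scripts run first
    plan += [("start", name[3:]) for name in start_scripts]  # then the S scripts
    return plan
```

For example, a runlevel directory containing `S55sshd`, `S10network` and `K15httpd` stops httpd, then starts network, then sshd.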

After the runlevel scripts have been executed, the “/etc/rc.d/rc.local” script is executed. This is a script where you can add your own custom bash commands that you want to execute at boot. So if you are not familiar with how to create a background startup service using the runlevel scripts, you can just add your bash commands to start the service in this rc.local script.

After the rc.local script has executed, the system displays the login prompt, and the boot is complete. That’s the boot process for SysV-Init systems.

5.2. SystemD Boot Process

SystemD is a system and service manager that was designed to replace SysV-Init, which has the following limitations:

- Services are started sequentially, even services that do not depend on each other.

- Longer boot times (mostly because of the point above).

- No easy and straightforward way to monitor running services.

- Dependencies have to be handled manually so you need very good knowledge of the dependencies involved when you want to modify the runlevel scripts to add a new service.
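A toy model shows why the first two limitations are linked. If each service takes a fixed time to start, starting services one after another takes the sum of all start times, while starting each service as soon as its dependencies are ready takes only as long as the longest dependency chain. The function names, durations and dependencies are made up, and the model ignores CPU and disk contention.

```python
from functools import lru_cache
from typing import Dict, List


def sequential_time(durations: Dict[str, float]) -> float:
    """SysV-Init style: each service starts only after the previous one finishes."""
    return sum(durations.values())


def parallel_time(durations: Dict[str, float], deps: Dict[str, List[str]]) -> float:
    """Start each service as soon as all of its dependencies have finished starting.

    The result is the length of the longest dependency chain (the critical path).
    `deps` must not contain cycles.
    """
    @lru_cache(maxsize=None)
    def finish(name: str) -> float:
        ready_at = max((finish(dep) for dep in deps.get(name, [])), default=0.0)
        return ready_at + durations[name]

    return max((finish(name) for name in durations), default=0.0)


if __name__ == "__main__":
    durations = {"network": 2.0, "sshd": 1.0, "httpd": 3.0, "crond": 0.5}
    deps = {"sshd": ["network"], "httpd": ["network"]}
    print(sequential_time(durations))       # 6.5
    print(parallel_time(durations, deps))   # 5.0
```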

Every resource that is managed by SystemD is called a unit. A unit (as defined in the man pages) is a plain-text file that stores information about any one of the following:

- a service

- a socket

- a device

- a mount point

- an automount point

- a swap file or partition

- a start-up target

- a watched file system path

- a timer controlled and supervised by systemd

- a resource management slice or a group of externally created processes.

The only two unit types we will focus on in this guide are “service” and “target” units, but there is a very good DigitalOcean article on SystemD units by Justin Ellingwood. SysV-Init runlevels were replaced by systemd targets, as shown in the table below:

Table showing the relationship between SysV-Init runlevels and SystemD targets

| SysV-Init Runlevel | SystemD Start-up Target |
| --- | --- |
| 0: Halt or shut down the system | poweroff.target |
| 1: Single-user mode | rescue.target |
| 2: Multi-user mode, without networking | multi-user.target |
| 3: Full multi-user mode, with networking | multi-user.target |
| 4: Undefined | multi-user.target |
| 5: Full multi-user mode with networking and graphical desktop | graphical.target |
| 6: Reboot | reboot.target |

Target files are used to group together the units needed for a specific target. These units can be services, devices, sockets, etc., and they are defined as dependencies of that target. There are three main ways of defining the dependencies of a target:

- “Wants=” statements inside the target unit files.

- “Requires=” statements inside the target unit files.

- Special “.wants” directories associated with each target unit file, found under the directory /etc/systemd/system.
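The sketch below gathers a target’s dependencies from all three sources: the `Wants=` and `Requires=` lines in its [Unit] section and the entries of its .wants directory. It is a simplified reader, not systemd’s parser: it ignores drop-in files, line continuations and the rule that an empty assignment resets the list. The helper names are hypothetical.

```python
import os
from typing import List, Set


def read_unit_list(unit_text: str, section: str, key: str) -> List[str]:
    """Return all space-separated values of `key` in `[section]` of a unit file."""
    values: List[str] = []
    current = None
    for raw in unit_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
        elif current == section and "=" in line:
            k, v = line.split("=", 1)
            if k.strip() == key:
                values.extend(v.split())  # repeated lines accumulate
    return values


def target_dependencies(unit_text: str, wants_dir: str) -> Set[str]:
    """Union of Wants=, Requires= and the unit names linked in the .wants directory."""
    deps = set(read_unit_list(unit_text, "Unit", "Wants"))
    deps |= set(read_unit_list(unit_text, "Unit", "Requires"))
    if os.path.isdir(wants_dir):
        deps |= set(os.listdir(wants_dir))
    return deps
```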

When a service has been configured to start at boot, a symbolic link will be created in the “.wants” directory of the corresponding target. For example, you can configure the apache2 service to start at boot using the following command:

$ systemctl enable apache2

Since the apache2 service has been configured to start at boot, it is now a dependency for the multi-user.target and as such, a symbolic link was created at:

/etc/systemd/system/multi-user.target.wants/apache2.service
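Where does that link come from? `systemctl enable` reads the unit’s [Install] section, and for each target named in `WantedBy=` (typically `multi-user.target` for a service like apache2) it creates a symlink in that target’s .wants directory. The sketch below reproduces only that step; the real command also handles `RequiredBy=`, `Alias=`, `Also=` and more. The function names and the `config_dir` parameter are hypothetical, so you can try it against a temporary directory instead of /etc/systemd/system.

```python
import os
from typing import List


def wanted_by(unit_text: str) -> List[str]:
    """Return the targets named in WantedBy= lines of the [Install] section."""
    targets: List[str] = []
    section = None
    for raw in unit_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]
        elif section == "Install" and "=" in line:
            key, value = line.split("=", 1)
            if key.strip() == "WantedBy":
                targets.extend(value.split())
    return targets


def enable(unit_path: str, config_dir: str = "/etc/systemd/system") -> List[str]:
    """Create <config_dir>/<target>.wants/<unit> symlinks; return the link paths."""
    with open(unit_path) as f:
        targets = wanted_by(f.read())
    unit_name = os.path.basename(unit_path)
    links = []
    for target in targets:
        wants_dir = os.path.join(config_dir, f"{target}.wants")
        os.makedirs(wants_dir, exist_ok=True)
        link = os.path.join(wants_dir, unit_name)
        if not os.path.islink(link):  # already enabled: leave the link alone
            os.symlink(unit_path, link)
        links.append(link)
    return links
```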

When you boot a system into multi-user.target, for example, all the units linked under “/etc/systemd/system/multi-user.target.wants” are started. Services that do not depend on each other can start in parallel, which makes the boot process faster. The tasks handled by the rc.sysinit script on SysV-Init systems are handled by units that basic.target depends on (mostly pulled in through sysinit.target), and you can list them using the following command:

$ systemctl list-dependencies basic.target

To check the default target of a system you can run the following command:

$ systemctl get-default

… and to set the default target you can run the following command (set it to graphical.target for example):

$ systemctl set-default graphical.target
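Under the hood, the default target is a symlink called default.target: `systemctl set-default` replaces the one in /etc/systemd/system, and `get-default` reports which target it points to. The sketch below imitates both on a directory you choose. It only looks in that one directory (real systemd also consults its other unit directories), and the function names are hypothetical.

```python
import os
from typing import Optional


def get_default_target(config_dir: str = "/etc/systemd/system") -> Optional[str]:
    """Return the name of the unit default.target points to, or None if unset here."""
    link = os.path.join(config_dir, "default.target")
    if not os.path.islink(link):
        return None
    return os.path.basename(os.readlink(link))


def set_default_target(target_unit_path: str, config_dir: str = "/etc/systemd/system") -> None:
    """Replace the default.target symlink so it points at `target_unit_path`."""
    link = os.path.join(config_dir, "default.target")
    if os.path.lexists(link):  # lexists also catches a dangling symlink
        os.remove(link)
    os.symlink(target_unit_path, link)
```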

If you are curious which parts of the start-up process take the longest, you can run the following command:

```
$ systemd-analyze critical-chain
graphical.target @17.569s
└─multi-user.target @17.569s
  └─ce-agent.service @6.061s +6.039s
    └─network-online.target @6.061s
      └─cloud-init.service @4.239s +1.820s
        └─network.service @3.570s +668ms
          └─NetworkManager-wait-online.service @3.127s +442ms
            └─NetworkManager.service @3.042s +84ms
              └─network-pre.target @3.042s
                └─cloud-init-local.service @1.491s +1.550s
                  └─basic.target @1.425s
                    └─sockets.target @1.424s
                      └─rpcbind.socket @1.424s
                        └─sysinit.target @1.424s
                          └─systemd-update-utmp.service @1.403s +21ms
                            └─auditd.service @1.266s +136ms
                              └─systemd-tmpfiles-setup.service @1.235s +29ms
                                └─systemd-journal-flush.service @426ms +808ms
                                  └─systemd-remount-fs.service @271ms +149ms
                                    └─systemd-fsck-root.service @584542y 2w 2d 20h 1min 48.791s +21ms
                                      └─systemd-journald.socket
                                        └─-.slice
```

In this output, the time after “@” is when the unit became active, and the time after “+” is how long the unit took to start.

This is just about all you need to know about systemd with regard to the Linux boot process. I plan on writing more tech articles, so make sure to check out the tech section of my blog.
